Is AI Smarter Than an 8th Grader?

(Image credit: Sierra Elman)

“Write a poem about a sunrise.” I asked three AI chatbots—OpenAI’s ChatGPT-4, Google’s Bard, and Anthropic’s Claude—and myself—an 8th grade human. I then surveyed a panel of 38 AI experts and 39 English experts to judge the results. Is AI smarter than an 8th grader?

And the survey says…AI is not smarter than an 8th grader, at least not yet. The 8th grader won 1st place, and by a higher margin when judged by English experts. Bard, ChatGPT-4, and Claude came in 2nd, 3rd, and 4th places, respectively, both in writing quality and their ability to fool the judges into believing they were authored by a human. Most strikingly, English experts were far better at discerning which poems were written by AI, with 11 English experts vs. only 3 AI experts guessing the author (human vs. AI) of all four poems correctly. This points to a need for English experts to play a greater role in helping shape future versions of AI technology.

With the explosive popularity of large language models (LLMs), much has been written about AI claiming the roles of human writers, and with that the loss of authentic human creativity. Personally, I’ve been working on a creative writing project — a collection of short fiction pieces and poetry, a few of which I have submitted for publication.

Recently, in response to one of my submissions, an editor responded, “The meter is exceptionally sharp on this poem, which is unusual for high school students, let alone someone in eighth grade. Please sign this statement attesting you did not use AI in any way to write this poem.” I felt a strange combination of flattered and slighted, but most of all, startled.

I then decided to add an offshoot to my ongoing creative writing project — I wanted to take a closer look at how well AI can create authentic writing. For my study, I chose to focus on poetry. Unlike other AI-generated writing, poetry is significantly more challenging for AI to generate authentically. Harvard student Maya Bodnick found that AI-generated essays easily passed all her freshman year classes, for example. But unlike in essays, a major component of poetry is human emotion, which AI intrinsically lacks. Keith Holyoak in the MIT Press Reader writes that “poetry may serve as a kind of canary in the coal mine — an early indicator of the extent to which AI promises (threatens?) to challenge humans as artistic creators.”

The experiment

How well can AI write poetry? In February 2023, Walt Hunter in The Atlantic examined AI poetry, concluding that AI poems were clichéd and full of wince-worthy rhymes. I wanted to see how AI capabilities have changed, roughly a year later. Mainly, I wanted to learn more about the implications for the future of poetry, and of creativity in general. I was interested in three questions:

- Turing Test: Can people correctly detect when poems are generated by AI?

- Are poems generated by AI actually quality poems?

- Is there a difference in judgment between English experts and AI experts?

To analyze these questions, I surveyed 38 AI experts (AI engineers, product managers, and leads at OpenAI, Google, Apple, Amazon, etc.) and 39 English experts (English teachers, professors, writers, authors, etc.) in January 2024. The survey presented four poems generated on December 27, 2023 by, respectively:

- Anthropic’s Claude 2.1

- Google’s Bard (Gemini had not yet been released)

- Me, an 8th grade human (I did not want to choose a pre-existing poem that was searchable, or that respondents may have previously come across. Also, I did not try particularly hard and threw together the poem in roughly 15 minutes)

- OpenAI’s ChatGPT-4

(Full text of the poems can be found at the bottom of this post.)

The survey asked respondents to rate each poem on a scale of 1–10 in terms of perceived quality, and whether they believed the poem was written by AI or by a human. They did not know how many poems were written by AI or humans, and they did not know the author of the human poem.

They also did not know the prompt I provided the three AI chatbots. I chose sunrises as the subject since it seemed relatively simple for an AI chatbot to handle.

Turing Test: Can people correctly detect when poems are generated by AI?

The majority of respondents (89.6%) correctly detected that the human’s poem was human. And most people had a pretty good sense for which poems were written by AI. 18.2% of respondents correctly identified the author of 4/4 poems_._ Over half the respondents (58.4%) correctly guessed 3/4 of the poem’s authors. 18.2% correctly guessed 2/4 and 3.9% correctly guessed 1/4. Only one person (1.3%) guessed all four incorrectly.

Overall, 33.8% of respondents mistakenly thought the AI poems were human. When broken down by AI chatbot, Bard fooled the most people by far. 46.8% of respondents believed Bard’s poem was written by a human, compared to 29.9% for ChatGPT, and 24.7% for Claude, as shown by the green bars in Figure 1.

Figure 1

Are poems generated by AI actually quality poems?

The AI contenders fared better here, but still did worse than the human, which rated the highest on average. However, the human’s poem was followed more closely by the AI poems. Specifically, the human poem averaged the highest rating in quality at 6.9/10, followed by the poems generated by Bard at 6.2/10, ChatGPT at 5.8/10, and Claude at 5.4/10, as shown by the blue bars in Figure 1. The overall AI poems’ average quality rating was 5.8/10.

Respondents had a definite bias against AI

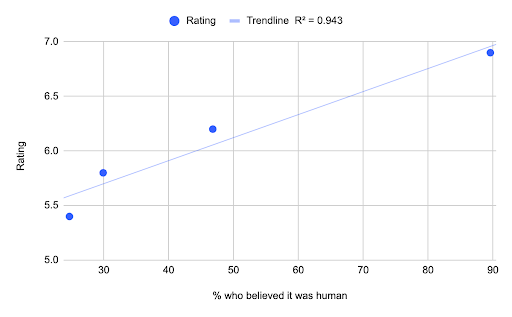

On average, if respondents believed a poem was written by a human, they would give the poem a higher rating in terms of quality. Specifically, as shown in Figure 2:

- Respondents who thought Claude’s poem was human rated it 1.79 points higher on average.

- Respondents who thought Bard’s poem was human rated it 1.9 points higher on average.

- Respondents who thought the ChatGPT poem was human rated it 1.95 points higher on average.

- Respondents who thought the human poem was human rated it 2.14 points higher on average.

In other words, either respondents would decide a poem was bad and therefore written by AI, or decide a poem was written by AI and therefore bad. (Note that respondents were asked to rate a poem first, and then guess if it was AI-generated, so it is more likely the former.) Either way, there was a definite bias against the perceived quality of AI writing.

Figure 2

Figure 3 illustrates the high correlation (R² = 0.943) between quality rating and the percentage of respondents who believed a poem was written by a human, for the four poems. (R² = 1.0 means a perfect correlation, and 0.0 means no correlation.)

Figure 3

Was there a difference in judgment between English experts and AI experts?

English experts were the least likely to be fooled

English experts were clearly better at discerning who wrote the poems. English experts correctly guessed the author of the poems an average of 3.13 out of 4 times, while AI experts were only able to do so 2.61 out of 4 times. This was not surprising, given that English experts probably had at least some background knowledge of poetry.

Most strikingly, English experts were far more likely to get a perfect score. Only 14 out of 77 (18%) respondents correctly identified the author of all four poems. The English experts were far more likely to do so, with 11 out of 39 (28%) English experts vs. only 3 out of 38 (8%) AI experts scoring perfectly.

I wanted to find out how the perfect scorers did it, and asked about their methodology.

English experts who scored perfectly noticed rhyming, overused literary devices, clichés, and logical flaws. Larry Flynn, Writing Instructor at the University of Massachusetts said, “The rhyme schemes [of the AI poems] made me a bit dubious…since many poets today don’t use a very traditional end-rhyme, I thought those pieces may have been trying to create the ‘idea’ of a poem instead of an actual poem.” Karen Tiegel, Middle School Head at The Nueva School (and former English teacher of 20 years), “recognized the style of a couple of the poems” and “also found that the AI-generated poems overused literary devices, especially similes and metaphors!” Jennifer Paull, Writing & Research Center Director at The Nueva School, often saw elements “that seemed particularly clichéd or illogical but not metaphorically justified (you don’t drink an ember, for instance).”

AI experts who scored perfectly noticed simplicity and rhyming issues, carelessness, and just plain “bad writing.” David Orr, Director of Engineering at Google DeepMind, has spent a lot of time working on LLMs, so he has a feeling when he sees most AI written text now. But sometimes, he sees specific clues. Bard’s poem “had a clear tell — it rhymed cloak with cloak, which is not something a human poet would do. Also the rhyme scheme was not consistent: mostly ABCB, but sometimes ABAB; again, I think a human would have been more careful.” Chat-GPT’s poem also had tells. “‘Each stroke bold, yet tenderly bold’ is honestly just bad writing. The final line, which carries a lot of weight in a poem, also didn’t really make sense. Dreams aren’t found at daybreak, they’re throughout the night. I thought that a human poet would rethink that as the ending.” Ted Hart, Data Science Manager at Apple, based his guesses on “the simplicity and amount of rhyming in the poem.”

Besides using process of elimination to eliminate poems they felt were obviously the work of AI, perfect scorers also pointed out qualities of the human poem that made it “human.” Flynn felt that the human poem “had the most innovative free form, so I felt it was most likely to be human-generated.” Orr thought the human poem “was super obviously human, I’ve never seen an LLM do interesting things with line breaks and visuals. This is probably coming at some point, but I knew immediately that this one was ‘real.’”

English experts were more discerning in their quality ratings

English experts exhibited a higher level of discernment in their quality ratings than AI experts. When the AI experts’ ratings were removed from the quality ratings, the rating of the human poem went up, and the ratings of the AI poems went down. English experts rated the human poem 7.2/10 on average, compared with Bard’s poem at 6.1/10, ChatGPT’s poem at 5.4/10, and Claude’s poem at 5.0/10, as shown by the blue bars in Figure 4. (The AI experts-only ratings are shown in green.) On average, the English experts rated the human poem 0.6 points higher than the AI experts. On average the English experts rated Bard’s, ChatGPT-4’s, and Claude’s poems 0.3, 0.8, and 0.9 points lower, respectively, than the AI experts.

That said, because AI experts generally would have less knowledge and expertise of poetry than English experts, I think it’s a fair assumption that the English experts’ quality ratings are more accurate. Either way, the human wins here–and by an even higher margin when judged solely by the English experts.

Figure 4

Conclusions

AI poetry isn’t there yet

Overall, most respondents could correctly detect which poems were written by AI, and also rated them lower in quality. And this was for a relatively superficial topic without any deeper meaning, whereas the topics of most poems nowadays relate to far more complex topics such as human emotion or social justice. Perhaps more importantly, the human poem was written by an 8th grader, not a professional poet. Therefore, it should not be that challenging to compete with.

That said, AI poetry is not bad — a number of survey respondents expressed that the survey was more challenging than they had expected. Some of the poems were more difficult to guess than others. Even perfect guessers like Orr had to rely on gut feel for one of the poems, which he felt “was pretty good, and I think was the one I was most uncertain about. But I thought that with only a little work it could be better, like breaking apart the three sections a bit more clearly.” (Interestingly enough, this was Claude’s poem, which had the lowest percentage of respondents who believed it was human.) Flynn pointed out that there is an element of reverse psychology involved in guessing. Although he attributed the poems with traditional end-rhyme to AI, he also thought, “then again, this is what a human might have been trying to do too — to mimic the classical form. Surprising and, yes, challenging!” Orr believes that “Overall LLM poetry has gotten quite good very rapidly. I’m not sure I’ll be able to tell in a year or two.”

Doomsayers are forecasting the end of careers in writing/creative fields, similarly to when calculators and computers were invented. But at least for now, calculators and computers haven’t replaced humans — they’ve become tools. Similarly, at least in its current forms, AI can really only serve as a part of the toolkit for writers/creatives, saving them time (as long as they carefully review and edit) on their writing/creative projects.

…but English experts can help

The most fascinating observation I made was that English experts were far better able to discern which poems were written by AI — 11 English experts vs. only 3 AI experts guessed perfectly for all 4 poems. Given their greater expertise in poetry, English experts were also more discerning in their quality rating than AI experts, rating the human poem higher than the AI poems in quality by a higher margin than the AI experts did. This all points to a need to have English experts on AI product teams collaborating with AI experts, to help refine the product and define what consists of high quality output. After all, it is difficult to build a product without knowing what the gold standard is.

Poet (and perfect scorer) Lee Rossi explained this point by comparing AI to a beginning poet: “Many beginning poets think they have to be ‘poetic,’ which means they use words and images they remember from their reading rather than relying on their own experience and sense of language. In other words, they write like an AI and not like a poet.”

Yes, this leads to the ironic question — if English experts help develop AI algorithms, aren’t they then digging their own grave? In the short term, I think that having professional writers help work on AI teams to improve future versions of AI has real benefit to both writers and the AI community. Better versions of AI free up writers to develop richer, more creative ideas and projects. It will allow them to more efficiently edit their works. And in the longer term, I am cautiously optimistic that AI will open up new paths and opportunities for creative work. What exactly this will turn out to be will be constantly evolving (and deserving of its own separate study). But it’s important for creatives to be flexible and able to pivot, and isn’t that already part of the DNA of the creative mind anyway?

Will AI eventually take over human roles (or even humans)? Possibly, but at least until then, it is clear that English experts are needed to help develop, test, and further refine AI algorithms to help improve AI writing quality. Rather than fight against AI, writers today should play an active role in shaping the future of AI, not just on the policy side, but on the technical side.

(Thank you to all survey respondents and Maya Bodnick for their help with this post.)

Update: On 2/8/2024, Google Bard was replaced with Gemini. Given that Bard won first place both in quality rating and in ability to fool respondents, I expected an improvement in Gemini over Bard. Most recently, I tried entering the same prompt in Gemini to see if there was any obvious degradation, and that poem (which can be found at the bottom of this article) surprisingly seemed to be slightly worse, as it further overused metaphors and similes. If any survey respondents or anyone else would like to comment on this, I would be very interested to hear your thoughts!

Daybreak by Claude

Golden rays peek over the horizon

Casting warmth on the sleeping land

The dark blanket of night recoils

As dawn’s light advances across the sky

Rosy hues dance and shimmer

Across low-hanging clouds

The sleeping world begins to stir

Creatures emerge and morning songs arise

A new day dawns, bright and full of promise

The rising sun banishes the dark

And illuminates a new beginning

Symphony of Sunrise by Bard

The first brushstroke of dawn,

A soft orange bled across the canvas of night,

Pushing back the inky blackness,

Inch by inch, like fingers of light.

Silhouettes stir on the horizon’s edge,

Mountains waking from their slumber,

Trees stretching their branches towards the gold,

Drinking in the warmth like a long, cool ember.

A symphony of birdsong erupts,

A joyous chorus greeting the newborn day,

As the sun, a fiery chariot, crests the hill,

Scattering diamonds across the morning’s gray.

The world stretches, yawns, throws off its cloak,

Dew-kissed grass sparkling in the newborn light,

And shadows retreat, chased by the sun’s warm cloak,

Vanishing into corners, day’s gentle might.

The air hums with the promise of new beginnings,

A fresh start etched in the sky’s vast blue,

As the sun climbs higher, painting the clouds with wings,

And the world basks in the golden hue.

So let us rise with the sunrise, hearts ablaze,

Embrace the day with open arms outstretched,

For in this symphony of light and shade, our lives interweave,

In the tapestry of dawn, beautifully etched.

Sunkissed by Sierra Elman (an 8th grade human)

The rooster warbles.

I watch as the sun kisses

the horizon. Delicately —

like the earth would split

open with too much passion.

I watch as gold light embraces

my view. It glosses over the sky —

darkness fades into baby blue

with fingerprints of violet & cotton

candy pink. I breathe in the honey air.

I memorize this moment, when

the sun blesses the land & chases away

the ghosts of night.

Whispers of Dawn by ChatGPT-4

In the hush of dawn’s first light,

A canvas unfolds in the east,

Whispering in hues of gentle might,

A spectacle, a visual feast.

Crimson bleeds into saffron gold,

Splashing the sky with a painter’s grace,

Each stroke bold, yet tenderly bold,

In the quiet world’s waking space.

The sun, a fiery orb on the rise,

Climbs with a slow, steady pace,

Banishing night’s lingering guise,

With warmth in its radiant embrace.

Birds trill in a chorus so sweet,

Welcoming the day’s fresh start,

In this moment, where earth and heaven meet,

Nature’s masterpiece, a work of heart.

The horizon, a blend of dream and light,

Speaks of hope, of beginnings anew,

In the transient beauty of morning’s sight,

Life finds a rhythm, steady and true.

Sunrise, a promise in the sky,

A daily rebirth, a world’s sigh,

In these moments before the day’s high,

Lies the quiet magic, where dreams lie.

Blooming Embers by Gemini (generated 2/15/24)

First blush, a hesitant hand paints

Across the canvas of night,

Pushing back the charcoal shades,

A silent, gentle fight.

Indigo bleeds to violet,

Kissed by gold and amethyst,

Stars, one by one, surrender,

Whispering their final tryst.

Horizon’s line, a fiery seam,

Embers burst in crimson bloom,

Sunlight spills, a liquid dream,

Chasing shadows to their doom.

Trees etched black, now clothed in green,

Diamonds scattered on the dew,

A symphony of birds unseen,

Welcoming the morning hue.

World awakens, bathed in light,

Hope unfurls on fragile wings,

A new day born, vibrant and bright,

The song of sunrise gently sings.