Tweets often contain URLs or links to a variety of content on the web, including images, videos, news articles and blog posts. SpiderDuck is a service at Twitter that fetches all URLs shared in Tweets in real-time, parses the downloaded content to extract metadata of interest and makes that metadata available for other Twitter services to consume within seconds.

Several teams at Twitter need to access the linked content, typically in real-time, to improve Twitter products. For example:

- Search to index resolved URLs and improve relevance

- Clients to display certain types of media, such as photos, next to the Tweet

- Tweet Button to count how many times each URL has been shared on Twitter

- Trust & Safety to aid in detecting malware and spam

- Analytics to surface a variety of aggregated statistics about links shared on Twitter

Background

Prior to SpiderDuck, Twitter had a service that resolved all URLs shared in Tweets by issuing HEAD requests and following redirects. While this service was simple and met the needs of the company at the time, it had a few limitations:

- It resolved the URLs but did not actually download the content. The resolution information was stored in an in-memory cache but not persisted durably to disk. This meant that if the in-memory cache instance was restarted, data would be lost.

- It did not implement politeness rules typical of modern bots, for example, rate limiting and following robots.txt directives.

Clearly, we needed to build a real URL fetcher that overcame the above limitations and would meet the company’s needs in the long term. Our first thought was to use or build on top of an existing open source URL crawler. We realized though that almost all of the available crawlers have two properties that we didn’t need:

- They are recursive crawlers. That is, they are designed to fetch pages and then recursively crawl the links extracted from those pages. Recursive crawling involves significant complexity in crawl scheduling and long term queuing, which isn’t relevant to our use case.

- They are optimized for large batch crawls. What we needed was a fast, real-time URL fetcher.

Therefore, we decided to design a new system that could meet Twitter’s real-time needs and scale horizontally with its growth. Rather than reinvent the wheel, we built the new system largely on top of open source building blocks, thus still leveraging the contributions of the open source community.

This is typical of many engineering problems at Twitter – while they resemble problems at other large Internet companies, the requirement that everything work in real-time introduces unique and interesting challenges.

System Overview

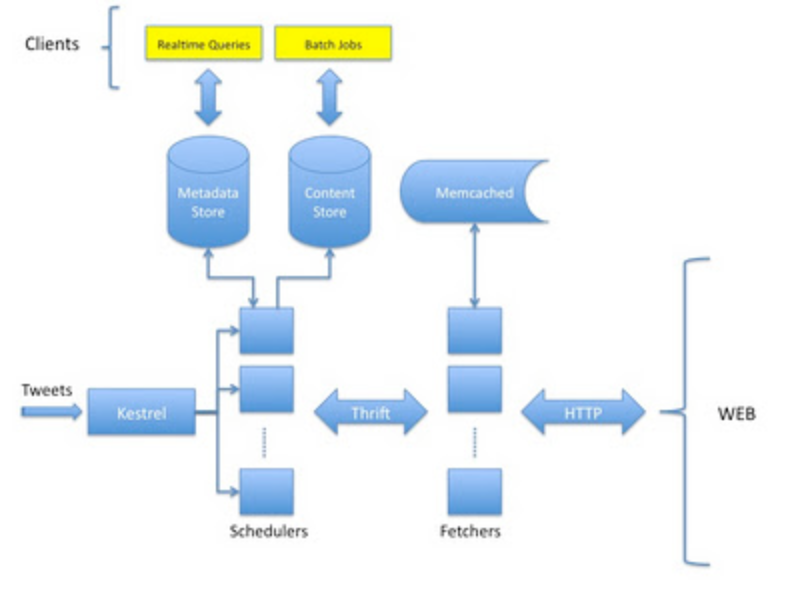

Here’s an overview of how SpiderDuck works. The diagram above illustrates its main components.

Kestrel: This is a message queuing system widely used at Twitter for queuing incoming Tweets.

Schedulers: These jobs determine whether to fetch a URL, schedule the fetch, and follow redirect hops, if any. After the fetch, they parse the downloaded content, extract metadata, and write the metadata to the Metadata Store and the raw content to the Content Store. Each scheduler performs its work independently of the others; that is, any number of schedulers can be added to horizontally scale the system as Tweet and URL volume grows.

Fetchers: These are Thrift servers that maintain short-term fetch queues of URLs, issue the actual HTTP fetch requests and implement rate limiting and robots.txt processing. Like the Schedulers, Fetchers scale horizontally with fetch rate.

Memcached: This is a distributed cache used by the fetchers to temporarily store robots.txt files.

Metadata Store: This is a Cassandra-based distributed hash table that stores page metadata and resolution information keyed by URL, as well as fetch status for every URL recently encountered by the system. This store serves clients across Twitter that need real-time access to URL metadata.

Content Store: This is an HDFS cluster for archiving downloaded content and all fetch information.

We will now describe the two main components of SpiderDuck — the URL Scheduler and the URL Fetcher — in more detail.

The URL Scheduler

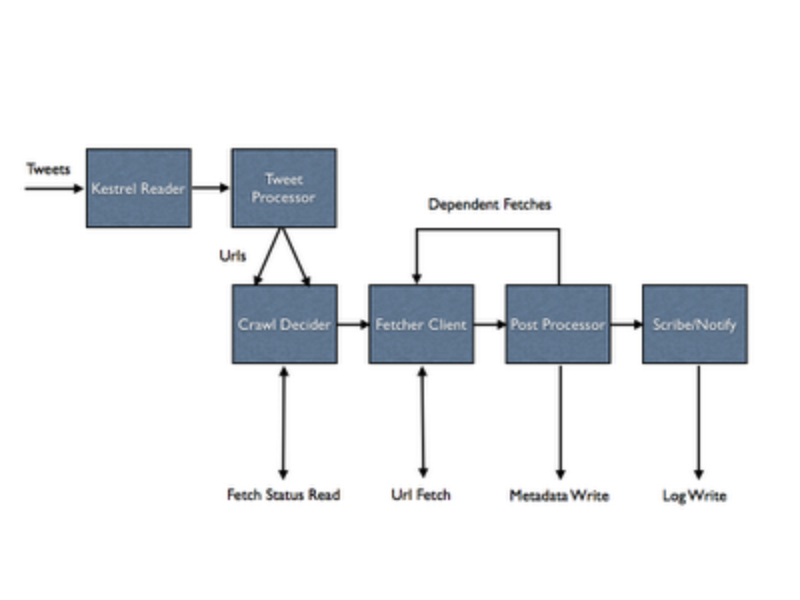

The diagram above illustrates the various stages of processing in the SpiderDuck Scheduler.

Like most of SpiderDuck, the Scheduler is built on top of an open source asynchronous RPC framework developed at Twitter called Finagle. (In fact, this was one of the earliest projects to utilize Finagle.) Each box in the diagram above, except for the Kestrel Reader, is a Finagle Filter – an abstraction that allows a sequence of processing stages to be easily composed into a fully asynchronous pipeline. Being fully asynchronous allows SpiderDuck to handle high throughput with a small, fixed number of threads.
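To make the idea of composing stages into an asynchronous pipeline concrete, here is a minimal sketch in Python's `asyncio`. It is not Finagle's API (Finagle is a Scala library); the names `compose`, `record_stage`, and `extract_urls` are hypothetical and only mirror the pattern of a filter wrapping the next stage.

```python
import asyncio
from typing import Awaitable, Callable, Sequence

# A "service" is any async function from a context dict to a context dict.
Service = Callable[[dict], Awaitable[dict]]
# A "filter" wraps a service and returns a new service.
Filter = Callable[[Service], Service]


def compose(filters: Sequence[Filter], terminal: Service) -> Service:
    """Wrap `terminal` so that the filters run in the order listed."""
    service = terminal
    for f in reversed(filters):
        service = f(service)
    return service


def record_stage(name: str) -> Filter:
    """Hypothetical filter that notes which stage the context passed through."""
    def make(next_service: Service) -> Service:
        async def handle(ctx: dict) -> dict:
            ctx.setdefault("trace", []).append(name)
            return await next_service(ctx)
        return handle
    return make


def extract_urls(next_service: Service) -> Service:
    """Hypothetical filter that picks out tokens that look like links."""
    async def handle(ctx: dict) -> dict:
        ctx["urls"] = [w for w in ctx["text"].split() if w.startswith("http")]
        return await next_service(ctx)
    return handle


async def done(ctx: dict) -> dict:
    # Terminal stage: nothing left to do, so return the context unchanged.
    return ctx


pipeline = compose(
    [record_stage("tweet_processor"), extract_urls, record_stage("crawl_decider")],
    done,
)
result = asyncio.run(pipeline({"text": "read this http://example.com/a now"}))
```

Each stage only awaits the next one, so no thread blocks while a step is in progress, and all per-URL state travels in the context dict.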

The Kestrel Reader continuously polls for new Tweets. As Tweets come in, they are sent to the Tweet Processor, which extracts URLs from them. Each URL is then sent to the Crawl Decider stage. This stage reads the Fetch Status of the URL from the Metadata Store to check if and when SpiderDuck has seen the URL before. The Crawl Decider then decides whether the URL should be fetched based on a pre-defined fetch policy (for example, do not fetch a URL that SpiderDuck has already fetched in the past X days). If the Decider decides not to fetch the URL, it logs the status to indicate that processing is complete. If it decides to fetch the URL, it sends the URL to the Fetcher Client stage.
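A fetch policy of this kind can be sketched in a few lines. The function name `should_fetch` and the seven-day window are assumptions for illustration; the post only says "X days" and does not describe the real policy in more detail.

```python
from datetime import datetime, timedelta
from typing import Optional

# Assumed value for illustration; the actual window is not given in the post.
REFETCH_AFTER = timedelta(days=7)


def should_fetch(
    last_fetched: Optional[datetime],
    now: datetime,
    refetch_after: timedelta = REFETCH_AFTER,
) -> bool:
    """Return True if the URL was never fetched or its last fetch is too old."""
    if last_fetched is None:
        return True
    return now - last_fetched >= refetch_after


now = datetime(2011, 11, 14, 12, 0, 0)
never_seen = should_fetch(None, now)
seen_yesterday = should_fetch(now - timedelta(days=1), now)
seen_last_month = should_fetch(now - timedelta(days=30), now)
```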

The Fetcher Client stage uses a client library to talk to the Fetchers. The client library implements the logic that determines which Fetcher will fetch a given URL; it also handles the processing of redirect hops. (It is typical to have a chain of redirects because URLs posted on Twitter are often shortened.) A context object is associated with each URL flowing through the Scheduler. The Fetcher Client adds all fetch information including status, downloaded headers, and content into the context object and passes it on to the Post Processor. The Post Processor runs the extracted page content through a metadata extractor library, which detects page encoding and parses the page with an open-source HTML5 parser. The extractor library implements a set of heuristics to retrieve page metadata such as title, description, and representative image. The Post Processor then writes all the metadata and fetch information into the Metadata Store. If necessary, the Post Processor can also schedule a set of dependent fetches. An example of dependent fetches is embedded media, such as images.
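As a rough illustration of the metadata heuristics, the sketch below pulls a title, description, and representative image out of a page. It uses Python's standard `html.parser` as a stand-in for the open-source HTML5 parser mentioned above, skips encoding detection, and the choice of the `description` and `og:image` meta tags is an assumption, not a description of the real extractor library.

```python
from html.parser import HTMLParser


class MetadataExtractor(HTMLParser):
    """Collects the first title, description, and image found in a page."""

    def __init__(self):
        super().__init__()
        self.metadata = {}
        self._in_title = False
        self._title_parts = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            key = attrs.get("name") or attrs.get("property")
            content = attrs.get("content")
            if key == "description" and content:
                self.metadata.setdefault("description", content)
            elif key == "og:image" and content:
                self.metadata.setdefault("image", content)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
            title = "".join(self._title_parts).strip()
            self.metadata.setdefault("title", title)

    def handle_data(self, data):
        if self._in_title:
            self._title_parts.append(data)


def extract_metadata(html: str) -> dict:
    parser = MetadataExtractor()
    parser.feed(html)
    parser.close()
    return parser.metadata


sample = """<html><head><title> Hello World </title>
<meta name="description" content="A test page">
<meta property="og:image" content="http://example.com/pic.png">
</head><body><p>hi</p></body></html>"""
metadata = extract_metadata(sample)
```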

After post-processing is complete, the URL context object is forwarded to the next stage that logs all the information, including full content, to the Content Store (HDFS) using an open source log aggregator called Scribe. This stage also notifies interested listeners that the URL processing is complete. The notification uses a simple Publish-Subscribe model, which is implemented using Kestrel’s fanout queues.
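The fanout semantics can be pictured with a toy in-memory model: every item published to a parent queue is copied to each subscriber's own queue, so listeners consume independently. This is not Kestrel's API; the class `FanoutQueue` and its methods are hypothetical.

```python
from collections import deque


class FanoutQueue:
    """Toy fanout: each published item is copied to every subscriber queue."""

    def __init__(self):
        self._children = {}

    def subscribe(self, name):
        # Return the subscriber's private queue, creating it on first use.
        return self._children.setdefault(name, deque())

    def publish(self, item):
        for queue in self._children.values():
            queue.append(item)


notifications = FanoutQueue()
search_queue = notifications.subscribe("search")
safety_queue = notifications.subscribe("trust_and_safety")
notifications.publish("http://example.com/a")

# One listener consuming its copy does not affect the other listener.
consumed = search_queue.popleft()
```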

All processing steps are executed asynchronously – no thread ever waits for a step to complete. All state related to each URL in flight is stored in the context object associated with it, which makes the threading model very simple. The asynchronous implementation also benefits from the convenient abstractions and constructs provided by Finagle and the Twitter Util libraries.

The URL Fetcher

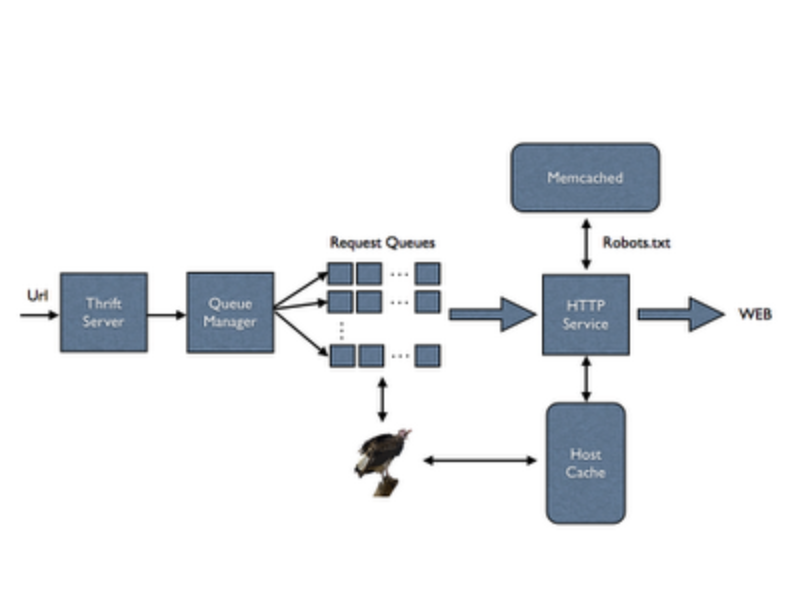

Let’s take a look at how a Fetcher processes a URL.

The Fetcher receives the URL through its Thrift interface. After basic validation, the Thrift handler passes the URL to a Request Queue Manager, which assigns it to the appropriate Request Queue. A scheduled task drains each Request Queue at a fixed rate. Once the URL is pulled off its queue, it is sent to the HTTP Service for processing. The HTTP service, built on top of Finagle, first checks if the host associated with the URL is already in its cache. If not, it creates a Finagle client for it and schedules a robots.txt fetch. After the robots.txt is downloaded, the HTTP service fetches the URL if robots.txt permits it. The robots.txt file itself is cached, both in the in-process Host Cache and in Memcached, so that it is not re-fetched for every new URL that the Fetcher encounters from that host.
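The robots.txt check with per-host caching might look roughly like the sketch below. It uses Python's standard `urllib.robotparser`; the `HostCache` class here, the `ExampleBot` user agent, and the injected `fetch_robots_txt` callable are hypothetical, and the Memcached tier is left out.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

USER_AGENT = "ExampleBot"  # hypothetical user agent name


class HostCache:
    """Caches parsed robots.txt rules per host (in-process only)."""

    def __init__(self, fetch_robots_txt):
        # fetch_robots_txt: callable taking a host and returning robots.txt text.
        self._fetch_robots_txt = fetch_robots_txt
        self._rules = {}

    def is_allowed(self, url):
        host = urlsplit(url).netloc
        rules = self._rules.get(host)
        if rules is None:
            # First URL seen for this host: download and parse robots.txt once.
            rules = RobotFileParser()
            rules.parse(self._fetch_robots_txt(host).splitlines())
            self._rules[host] = rules
        return rules.can_fetch(USER_AGENT, url)


robots_fetches = []


def fake_fetch(host):
    # Stand-in for a real HTTP fetch, so the example needs no network access.
    robots_fetches.append(host)
    return "User-agent: *\nDisallow: /private/\n"


cache = HostCache(fake_fetch)
public_ok = cache.is_allowed("http://example.com/index.html")
private_ok = cache.is_allowed("http://example.com/private/report")
```

The second lookup reuses the cached rules, so robots.txt is fetched only once for the host.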

Tasks called Vultures periodically examine the Request Queues and Host Cache to find queues and hosts that haven’t been used for a period of time; when found, they are deleted. The Vultures also report useful stats through logs and the Twitter Commons stats exporting library.
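The core of such a cleanup task is a sweep over last-used timestamps. This is a minimal sketch; the function name `evict_idle` and the idea of tracking a `last_used` map are assumptions, since the post does not describe how idleness is recorded.

```python
def evict_idle(last_used: dict, now: float, max_idle_seconds: float) -> list:
    """Delete entries idle for longer than max_idle_seconds; return their keys."""
    stale = [key for key, used_at in last_used.items()
             if now - used_at > max_idle_seconds]
    for key in stale:
        del last_used[key]
    return stale


# Timestamps are plain seconds so the example is deterministic.
hosts = {"busy.example": 990.0, "quiet.example": 100.0}
evicted = evict_idle(hosts, now=1000.0, max_idle_seconds=600.0)
```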

The Fetcher’s Request Queue serves an important purpose: rate limiting. SpiderDuck rate limits outgoing HTTP fetch requests per domain so as not to overload web servers receiving requests. For accurate rate limiting, SpiderDuck ensures each Request Queue is assigned to exactly one Fetcher at any point in time, with automatic failover to a different Fetcher in case the assigned Fetcher fails. A cluster suite called Pacemaker assigns Request Queues to Fetchers and manages failover. URLs are assigned to Request Queues based on their domains by a Fetcher client library. The default rate limit used for all web sites can be overridden on a per-domain basis, as needed. The Fetchers also implement queue backoff logic. That is, if URLs are coming in faster than they can be drained, the Fetchers reject requests to signal the client to back off or take other suitable action.
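The sketch below illustrates per-domain queues that are drained at a fixed rate and that reject new URLs when full. All names and limits are hypothetical, the URL's hostname is used as a simplification of "domain", and the Pacemaker-based queue assignment is not modeled.

```python
from collections import deque
from urllib.parse import urlsplit


class QueueFull(Exception):
    """Raised to tell the caller to back off."""


class RequestQueue:
    def __init__(self, max_pending, per_tick):
        self.max_pending = max_pending
        self.per_tick = per_tick
        self._pending = deque()

    def offer(self, url):
        if len(self._pending) >= self.max_pending:
            raise QueueFull(url)
        self._pending.append(url)

    def drain(self):
        """Release at most per_tick URLs; meant to be called by a fixed-rate timer."""
        released = []
        while self._pending and len(released) < self.per_tick:
            released.append(self._pending.popleft())
        return released


class RequestQueueManager:
    def __init__(self, max_pending, default_per_tick, per_tick_overrides=None):
        self._max_pending = max_pending
        self._default_per_tick = default_per_tick
        self._overrides = per_tick_overrides or {}
        self._queues = {}

    def offer(self, url):
        domain = urlsplit(url).hostname
        if domain not in self._queues:
            # Use the per-domain override if one exists, else the default rate.
            rate = self._overrides.get(domain, self._default_per_tick)
            self._queues[domain] = RequestQueue(self._max_pending, rate)
        self._queues[domain].offer(url)

    def drain_all(self):
        return {domain: queue.drain() for domain, queue in self._queues.items()}


manager = RequestQueueManager(
    max_pending=2, default_per_tick=1, per_tick_overrides={"fast.example": 2}
)
manager.offer("http://slow.example/a")
manager.offer("http://slow.example/b")
try:
    manager.offer("http://slow.example/c")
    rejected = False
except QueueFull:
    rejected = True
manager.offer("http://fast.example/x")
manager.offer("http://fast.example/y")
first_tick = manager.drain_all()
```

Because every URL for a domain goes through a single queue, the drain rate of that queue is the effective rate limit for the domain.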

For security purposes, the Fetchers are deployed in a special zone in Twitter data centers called a DMZ. This means that the Fetchers cannot access Twitter’s production clusters and services. Hence, it is all the more important to keep them lightweight and self-contained, a principle which guided many aspects of the design.

How Twitter uses SpiderDuck

Twitter services consume SpiderDuck data in a number of ways. Most query the Metadata Store directly to retrieve URL metadata (for example, page title) and resolution information (that is, the canonical URL after redirects). The Metadata Store is populated in real-time, typically seconds after the URL is tweeted. These services do not talk directly to Cassandra, but instead to SpiderDuck Thrift servers that proxy the requests. This intermediate layer gives SpiderDuck the flexibility to transparently switch storage systems, if necessary. It also leaves room for higher-level API abstractions than would be possible if the services interacted directly with Cassandra.
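The value of the proxy layer can be shown with a small interface sketch: callers depend on a lookup API, and the storage backend behind it can be swapped without touching them. The names `MetadataBackend`, `InMemoryBackend`, and `MetadataService` are hypothetical, and an in-memory dict stands in for Cassandra.

```python
from typing import Dict, Iterable, Optional, Protocol


class MetadataBackend(Protocol):
    """Anything that can return the stored row for a URL, or None."""

    def get(self, url: str) -> Optional[dict]: ...


class InMemoryBackend:
    """Stand-in storage; a real backend would query a distributed store."""

    def __init__(self, rows: Dict[str, dict]):
        self._rows = dict(rows)

    def get(self, url: str) -> Optional[dict]:
        return self._rows.get(url)


class MetadataService:
    """The layer clients talk to; it hides which backend is in use."""

    def __init__(self, backend: MetadataBackend):
        self._backend = backend

    def lookup(self, urls: Iterable[str]) -> Dict[str, dict]:
        # Batch lookup: return rows only for URLs that are known.
        found = {}
        for url in urls:
            row = self._backend.get(url)
            if row is not None:
                found[url] = row
        return found


service = MetadataService(InMemoryBackend({
    "http://t.co/abc": {"title": "Example", "resolved_url": "http://example.com/"},
}))
rows = service.lookup(["http://t.co/abc", "http://t.co/missing"])
```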

Other services periodically process SpiderDuck logs in HDFS to generate aggregate stats for Twitter’s internal metrics dashboards or conduct other types of batch analyses. The dashboards help us answer questions like “How many images are shared on Twitter each day?” “What news sites do Twitter users most often link to?” and “How many URLs did we fetch yesterday from this specific website?”

Note that services don’t typically tell SpiderDuck what to fetch; SpiderDuck fetches all URLs from incoming Tweets. Instead, services query information related to URLs after it becomes available. SpiderDuck also allows services to make requests directly to the Fetchers to fetch arbitrary content via HTTP (thus benefiting from our data center setup, rate limiting, robots.txt support and so on), but this use case is not common.

Performance numbers

SpiderDuck processes several hundred URLs every second. A majority of these are unique over the time window defined by SpiderDuck’s fetch policy, and hence get fetched. For URLs that get fetched, SpiderDuck’s median processing latency is under two seconds, and the 99th percentile processing latency is under five seconds. This latency is measured from Tweet creation time, which means that for 99 percent of fetched URLs, in under five seconds after a user clicks “Tweet,” the URL in that Tweet is extracted, prepared for fetch, all redirect hops are retrieved, the content is downloaded and parsed, and the metadata is extracted and made available to clients via the Metadata Store. Most of that time is spent either in the Fetcher Request Queues (due to rate limiting) or in actually fetching from the external web server. SpiderDuck itself adds no more than a few hundred milliseconds of processing overhead, most of which is spent in HTML parsing.

SpiderDuck’s Cassandra-based Metadata Store handles close to 10,000 requests per second. Each request is typically for a single URL or a small batch (around 20 URLs), but it also processes large batch requests (200-300 URLs). The store’s median latency for reads is 4-5 milliseconds, and its 99th percentile is 50-60 milliseconds.

Acknowledgements

The SpiderDuck core team consisted of the following folks: Abhi Khune, Michael Busch, Paul Burstein, Raghavendra Prabhu, Tian Wang and Yi Zhuang. In addition, we’d like to acknowledge the following folks, spanning many teams across the company, who contributed to the project either directly, by helping with components SpiderDuck relies on (for example, Cassandra, Finagle, Pacemaker and Scribe) or with its unique data center setup: Alan Liang, Brady Catherman, Chris Goffinet, Dmitriy Ryaboy, Gilad Mishne, John Corwin, John Sirois, Jonathan Boulle, Jonathan Reichhold, Marius Eriksen, Nick Kallen, Ryan King, Samuel Luckenbill, Steve Jiang, Stu Hood and Travis Crawford. Thanks also to the entire Twitter Search team for their invaluable design feedback and support. If you want to work on projects like this, join the flock!

- Raghavendra Prabhu (@omrvp), Software Engineer