Image Source: Business Of Fashion

The AI world took notice recently when Apple unveiled Ferret, a multimodal model that, in comparisons reported in Apple’s research paper, outperforms GPT-4V (GPT-4 with vision) on some fine-grained computer vision tasks, such as referring to and locating small image regions. Ferret has the potential to change how machines understand images and text together.

Let’s unpack how Ferret works its magic and why it marks a new phase in the AI race between tech titans.

How Apple’s Ferret System Works


Ferret combines several components to understand visual and textual inputs together:

Visual Analysis with CLIP ViT

- Uses a CLIP ViT (Vision Transformer) image encoder to turn an image into feature vectors the language model can work with

- Identifies objects, shapes, and other details in the image

Language Understanding

- Uses a large language model to process the text prompt

- Understands references to specific objects or regions in the accompanying image, whether given as points, boxes, or free-form shapes

Referring Expression Comprehension

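- Combines the visual and textual information, representing each referred region by both its coordinates and visual features drawn from inside it (what the Ferret paper calls a hybrid region representation)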

- Locates (grounds) the objects mentioned in a text prompt by outputting their coordinates in the image

- Provides detailed descriptions of the identified objects/regions

By integrating computer vision and natural language processing, Ferret can break down complex visual scenes and respond accurately to prompts about specific regions.
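To make the "combine the visual and textual information" step more concrete, here is a minimal Python sketch of one way a model can represent a referred region: the box written out as quantized coordinate text, plus a visual feature pooled from the image encoder’s feature grid. The Ferret paper describes a hybrid representation along these lines, but the square feature grid, the 1,000-bin quantization, and the plain mean pooling below are simplifying assumptions, not Apple’s implementation, and every name here (`RegionRef`, `quantize_box`, `pool_region`, `encode_region`) is hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
FeatureGrid = List[List[List[float]]]    # grid[row][col] -> feature vector


@dataclass
class RegionRef:
    text: str             # box coordinates written out as text tokens
    feature: List[float]  # visual feature pooled from inside the box


def quantize_box(box: Box, width: int, height: int, bins: int = 1000) -> List[int]:
    """Map pixel coordinates to integers in [0, bins - 1] (the bin count is an assumption)."""
    scales = (width, height, width, height)
    return [min(bins - 1, int(v / s * bins)) for v, s in zip(box, scales)]


def pool_region(grid: FeatureGrid, box: Box, width: int, height: int) -> List[float]:
    """Average the features of grid cells whose centers fall inside the box.

    Plain mean pooling is a stand-in; Ferret's spatial-aware visual
    sampler is more elaborate.
    """
    n = len(grid)  # assume a square n x n grid covering the whole image
    x1, y1, x2, y2 = box
    selected = []
    for row in range(n):
        for col in range(n):
            cx = (col + 0.5) * width / n   # cell center in pixels
            cy = (row + 0.5) * height / n
            if x1 <= cx <= x2 and y1 <= cy <= y2:
                selected.append(grid[row][col])
    if not selected:
        # Box too small to contain any cell center: use the cell under its center.
        col = min(n - 1, int((x1 + x2) / 2 / width * n))
        row = min(n - 1, int((y1 + y2) / 2 / height * n))
        selected.append(grid[row][col])
    dim = len(selected[0])
    return [sum(vec[d] for vec in selected) / len(selected) for d in range(dim)]


def encode_region(grid: FeatureGrid, box: Box, width: int, height: int) -> RegionRef:
    """Hybrid reference: discrete coordinates as text plus a continuous feature."""
    coords = quantize_box(box, width, height)
    text = "[" + ", ".join(str(c) for c in coords) + "]"
    return RegionRef(text=text, feature=pool_region(grid, box, width, height))


if __name__ == "__main__":
    # Toy 4x4 grid on a 400x400 image; each "feature" is just [row, col].
    toy = [[[float(r), float(c)] for c in range(4)] for r in range(4)]
    print(encode_region(toy, (0, 0, 200, 200), 400, 400))
```

On the toy grid, the top-left quarter of the image becomes the text `[0, 0, 500, 500]` paired with the averaged feature `[0.5, 0.5]`. Pairing the two is the point of a hybrid scheme: coordinates alone say where a region is, while the pooled feature says what it looks like, which matters for irregular or tiny regions.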

How Ferret Compares to GPT-4

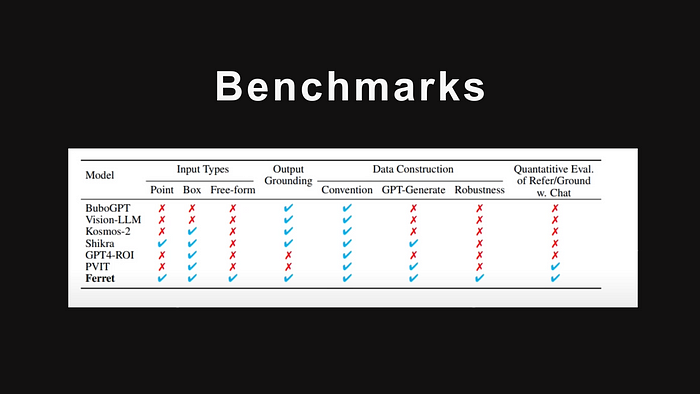

Benchmark tests against other multimodal models

Apple’s researchers compared Ferret with GPT-4V and found it stronger in some key areas of multimodal comprehension. Here’s an overview:

Referring Accuracy

- Ferret more accurately identifies and describes small, precise regions of images based on textual prompts

- GPT-4V struggles with small details but handles high-level scene understanding well

Object Grounding

- Ferret precisely locates even tiny objects within complex images

- GPT-4V, in the paper’s examples, struggles to localize small objects in crowded scenes

On the referring benchmarks reported in Apple’s paper, Ferret outperformed specialized region-aware models such as GPT4RoI and Microsoft’s Kosmos-2. It also did better than GPT-4V in side-by-side comparisons on referring expressions.
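Grounding benchmarks of this kind are commonly scored by counting a predicted box as correct when its intersection-over-union (IoU) with the ground-truth box is at least 0.5. The Python sketch below shows that convention; treat it as an illustrative assumption rather than the exact protocol of every benchmark in Apple’s paper, and the function names as hypothetical. It also shows why small objects are unforgiving: the same 10-pixel offset that barely matters for a large box pushes a small one below the threshold.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def grounding_accuracy(preds: Sequence[Box], targets: Sequence[Box],
                       threshold: float = 0.5) -> float:
    """Fraction of predictions whose IoU with the target meets the threshold.

    The 0.5 default follows a common convention (an assumption here).
    """
    if len(preds) != len(targets):
        raise ValueError("preds and targets must have the same length")
    if not targets:
        return 0.0
    hits = sum(1 for p, t in zip(preds, targets) if iou(p, t) >= threshold)
    return hits / len(targets)


if __name__ == "__main__":
    small_target, small_pred = (0, 0, 20, 20), (10, 0, 30, 20)      # 20 px box, 10 px off
    large_target, large_pred = (0, 0, 200, 200), (10, 0, 210, 200)  # 200 px box, 10 px off
    print("small IoU:", round(iou(small_pred, small_target), 3))
    print("large IoU:", round(iou(large_pred, large_target), 3))
    print("accuracy:", grounding_accuracy([small_pred, large_pred],
                                          [small_target, large_target]))
```

Here the shifted small box scores an IoU of about 0.33 and counts as a miss, while the equally shifted large box scores about 0.90 and counts as a hit, for an accuracy of 0.5. A model that is slightly imprecise everywhere therefore loses most of its points on small objects.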

Why Ferret Excels Where GPT-4 Falters

GPT-4 is an incredibly capable AI system, but Apple’s Ferret shines in areas where GPT-4 falls short:

Precision Referring

- Ferret is trained with a strong emphasis on referring and grounding rather than as a general-purpose assistant

- That focus enables detailed, accurate understanding of specific image regions

Specialized Architecture

- Optimized for fine-grained analysis of images, especially crowded and complex scenes

- Purpose-built to locate and describe small, precise regions of images

By specializing in detailed visual comprehension, Ferret fills an important gap in AI capabilities while GPT-4 takes a more generalized approach.
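Why are small regions so hard? One rough way to see it is to count how many image patches a vision transformer devotes to an object. The Python sketch below assumes a CLIP ViT-L/14 encoder at 336x336 input (14-pixel patches, a 24x24 grid) and that the whole image is simply stretched to that size; the constants and the `patches_covered` helper are illustrative assumptions, and the results are back-of-the-envelope arithmetic, not measurements from Apple’s paper.

```python
import math

# Assumed encoder geometry: CLIP ViT-L/14 at 336x336 input.
INPUT_SIZE = 336               # pixels per side after resizing
PATCH = 14                     # pixels per patch side
GRID = INPUT_SIZE // PATCH     # patches per side (24)


def patches_covered(obj_w: float, obj_h: float, img_w: int, img_h: int) -> int:
    """Approximate patch count for an object, assuming it is aligned to the patch grid.

    The image is assumed to be stretched to INPUT_SIZE x INPUT_SIZE; real
    preprocessing may crop or pad instead, and misalignment can add a row
    or column of partially covered patches.
    """
    scaled_w = obj_w * INPUT_SIZE / img_w
    scaled_h = obj_h * INPUT_SIZE / img_h
    return math.ceil(scaled_w / PATCH) * math.ceil(scaled_h / PATCH)


if __name__ == "__main__":
    total = GRID * GRID
    for name, (w, h) in {"40x40 px sign": (40, 40), "quarter-frame car": (960, 540)}.items():
        n = patches_covered(w, h, 1920, 1080)
        print(f"{name}: ~{n} of {total} patches")
```

Under these assumptions, a 40x40-pixel object in a 1920x1080 photo falls inside a single one of the 576 patches, sharing it with its surroundings, while an object filling a quarter of the frame spans about 144 patches. Architectures aimed at fine-grained referring try to recover detail for the first case, where a general-purpose model has very little signal to work with.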

The Significance of Apple’s Achievement

The introduction of Ferret has major implications for the future of AI:

Pushing Boundaries of Multimodal AI

- Sets a new standard for detailed, real-world visual understanding in AI systems

- Major milestone in developing advanced multimodal intelligence

Applications Across Industries

- Could significantly improve computer vision systems for autonomous vehicles by better recognizing objects in complex driving scenarios

- Useful for detailed image annotation, VR/AR, visual chatbots, and more

Competitive Pressure in AI Industry

- Establishes Apple as an innovator in AI amid competition from Google, Meta, and Microsoft

- Lights a fire under big tech to further improve multimodal comprehension abilities

By outperforming GPT-4V on these fine-grained tasks, Apple shows it’s a serious contender in cutting-edge AI research and development. This raises the bar for tech giants racing toward artificial general intelligence.

What This Means for Apple’s AI Ambitions

The launch of the formidable Ferret model offers clues about Apple’s emerging AI strategy:

Upgrading Siri with Advanced Generative AI

- Rumors of “Apple GPT” — an internal GPT-style model to massively upgrade Siri, iOS typing suggestions, and other language features

- Ferret may hint at Apple’s accelerated investment in transformer language models

Lead in Multimodal AI Capabilities

- Ferret suggests Apple’s machine learning research is competitive with the industry’s best

- Expect a focus on excelling at visual comprehension

Integration Across the Apple Product Line

- Sophisticated AI like Ferret paves the way for new premium product capabilities

- AR/VR, cameras, autonomous systems could see upgrades fueled by multimodal AI

With game-changing research like Ferret underway, Apple is gearing up to unleash some seriously advanced AI capabilities.

The Outlook for GPT-4 vs Apple in AI

While models like GPT-4 still dominate in key language tasks, Apple’s specialized approach gives it an edge in multimodal intelligence.

GPT-4’s Persisting Strengths

- Broader general knowledge about concepts and objects, and stronger reasoning

- Superior conversational ability and linguistic mastery

Apple’s Differentiation

- Strength in fine-grained computer vision and visual referring expressions

- Tight integration of NLP and computer vision, with room for optimization on Apple devices

As GPT-4 improves through massive scale and data, expect Apple to lean into areas like video, images, and cross-modal tasks. With heavy investment in both spheres, exciting innovation lies ahead!

Frequently Asked Questions — FAQs

What is Ferret, and how does it differ from GPT-4?

Ferret is Apple’s multimodal AI model specialized for detailed visual comprehension. In comparisons reported in Apple’s paper, it outperforms GPT-4V at referring to and locating specific image regions.

How does Ferret impact Apple’s Siri and other language features?

Ferret is a research model rather than a Siri feature, but it may hint at future upgrades to Siri and iOS typing suggestions and reflects Apple’s growing investment in transformer language models.

What are the potential applications of Ferret in industries beyond AI?

Ferret’s applications range from enhancing computer vision in autonomous vehicles to improving image annotation, VR/AR, and visual chatbots.

How does Apple differentiate itself in the AI race against GPT-4?

While GPT-4 excels in general language tasks, Apple’s Ferret leads in fine-grained computer vision tasks such as visual referring expressions, thanks to its tight integration of NLP and CV.

What clues does Ferret provide about Apple’s AI strategy?

Ferret suggests Apple’s focus on excelling at visual AI comprehension, with potential integration across its product line, including AR/VR, cameras, and autonomous systems.

How does Ferret contribute to the evolution of AI systems in the real world?

Ferret signals a new phase in AI, moving models closer to human-like perception of and reasoning about specific details in real-world images, and it places Apple among the leaders of this progress.

Conclusion

The introduction of Apple’s Ferret system marks a new phase in the artificial intelligence race between tech titans. By outperforming GPT-4V in the referring and grounding comparisons reported in its paper, Apple asserts itself as a leader in AI specialized for detailed visual sense-making. As Google, Microsoft, and others respond with beefed-up computer vision models of their own, Apple appears determined to compete blow for blow in cutting-edge machine learning. If models like Ferret are any indication, we are approaching AI systems with ever more human-like mastery of perceiving and reasoning about the messy real world around us. And Apple now stands at the vanguard of that progress.

This article was originally published on AIFocussed.com